if (!require("pacman")) install.packages("pacman")

pacman::p_load(

here, qs, # file management

magrittr, janitor, # data wrangling

easystats, sjmisc, # data analysis

gt, gtExtras, # table visualization

ggpubr, ggwordcloud, # visualization

tidytext, widyr, # text analysis

openalexR,

tidyverse # load last to avoid masking issues

)

Text processing in R

Session 08 - Showcase

Preparation

Code chunks from the session

Create subsample

review_subsample <- review_works_correct %>%

# Restriction: language and type

filter(language == "en") %>%

filter(type == "article") %>%

# Data transformation

unnest(topics, names_sep = "_") %>%

filter(topics_name == "field") %>%

filter(topics_i == "1") %>%

# Restriction: research field

filter(

topics_display_name == "Social Sciences"|

topics_display_name == "Psychology"

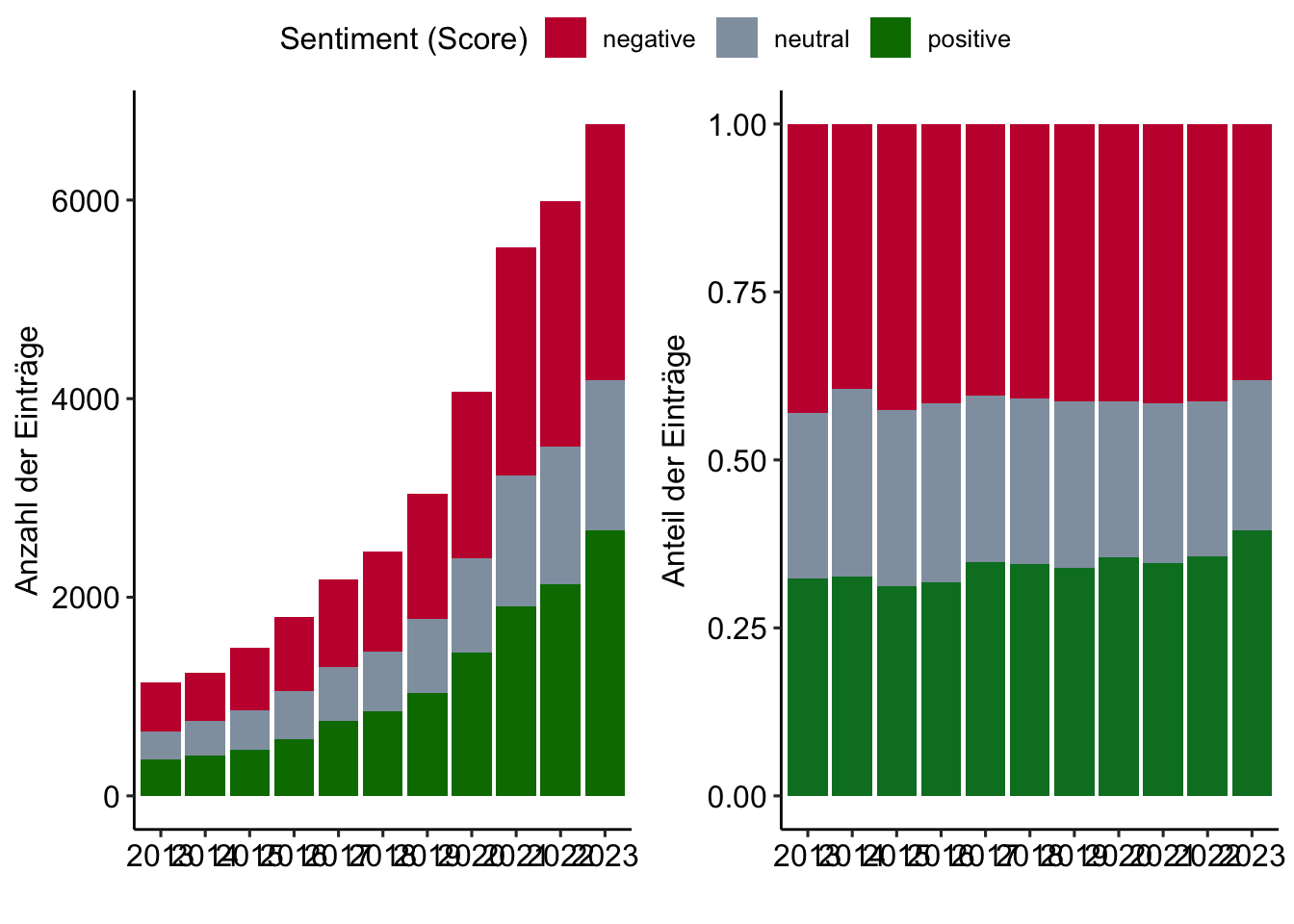

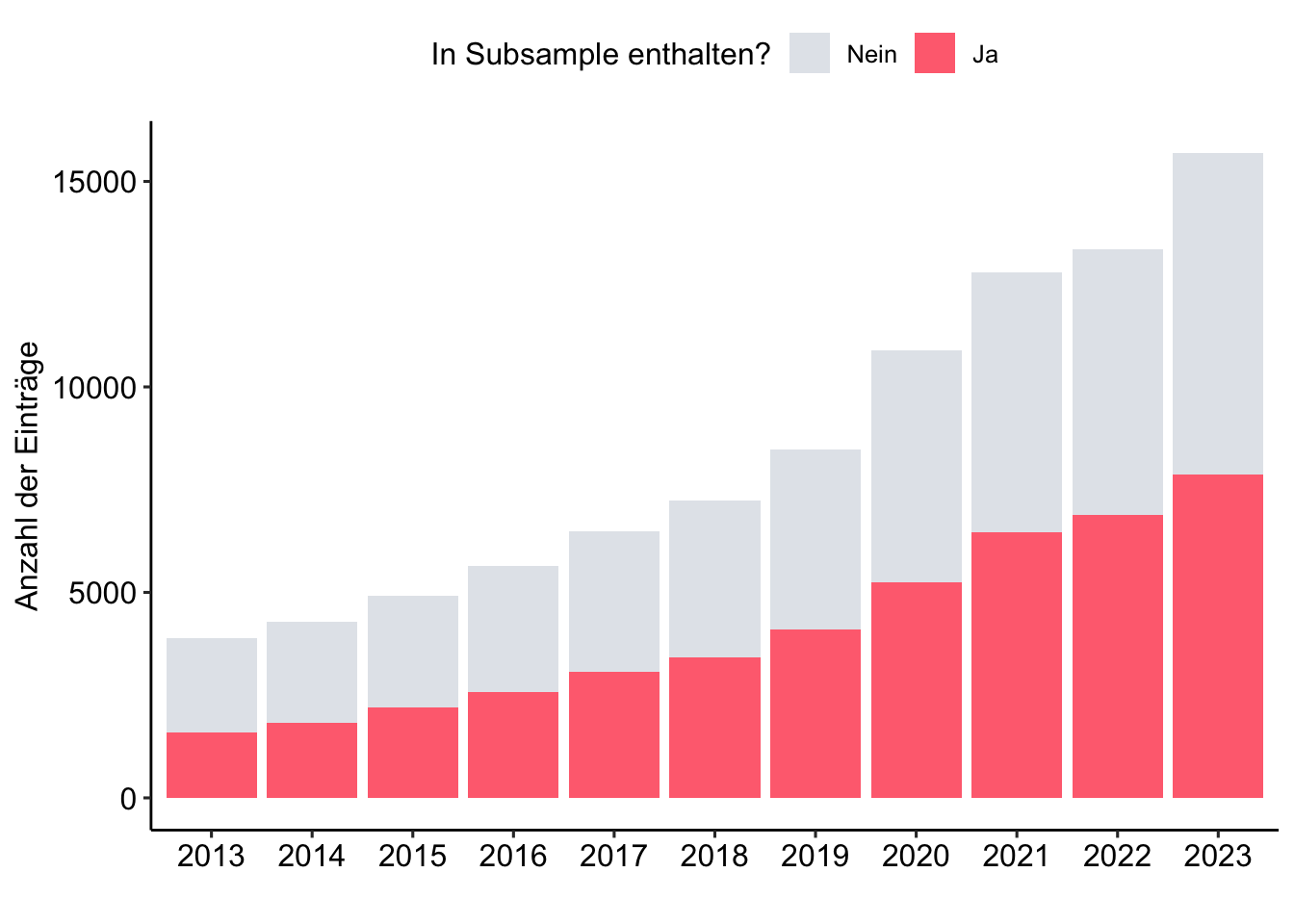

)Subsample im Zeitverlauf

review_works_correct %>%

mutate(

included = ifelse(id %in% review_subsample$id, "Yes", "No"),

included = factor(included, levels = c("No", "Yes"))

) %>%

ggplot(aes(x = publication_year_fct, fill = included)) +

geom_bar() +

labs(

x = "",

y = "Number of entries",

fill = "Included in subsample?"

) +

scale_fill_manual(values = c("#A0ACBD50", "#FF707F")) +

theme_pubr()
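The plot code above depends on two details that are easy to miss: the explicit factor level order, which decides which level gets which colour in `scale_fill_manual()` (unnamed values are matched to the levels in order), and the 8-digit hex colour, whose last two digits are an alpha channel. The following is a minimal base R sketch of both points. The ids and the labels `"no"` and `"yes"` are hypothetical placeholders, not values from the data set.

```r
# Toy stand-ins for the full data set and the subsample (hypothetical ids)
all_ids <- c("W1", "W2", "W3", "W4")
subsample_ids <- c("W2", "W4")

# Flag membership, then fix the level order explicitly
included <- ifelse(all_ids %in% subsample_ids, "yes", "no")
included <- factor(included, levels = c("no", "yes"))

# The level order is the order in which unnamed manual colours are assigned
levels(included)

# Counts per level, reported in level order
table(included)

# Decode the 8-digit hex colour: the last two digits are the alpha channel
grey_rgba <- grDevices::col2rgb("#A0ACBD50", alpha = TRUE)
grey_rgba
```

With this level order, the first (translucent grey) colour goes to the entries outside the subsample and the second (red) colour to the entries inside it. Without the explicit `levels` argument, the levels would be sorted alphabetically, which may or may not match the intended colour assignment.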

Tokenization of the abstracts

# Create tidy data

review_tidy <- review_subsample %>%

# Tokenization

tidytext::unnest_tokens("text", ab) %>%

# Remove stopwords

filter(!text %in% tidytext::stop_words$word)

# Preview

review_tidy %>%

select(id, text) %>%

print(n = 10)

# A tibble: 4,880,965 × 2

id text

<chr> <chr>

1 https://openalex.org/W4293003987 5

2 https://openalex.org/W4293003987 item

3 https://openalex.org/W4293003987 world

4 https://openalex.org/W4293003987 health

5 https://openalex.org/W4293003987 organization

6 https://openalex.org/W4293003987 index

7 https://openalex.org/W4293003987 5

8 https://openalex.org/W4293003987 widely

9 https://openalex.org/W4293003987 questionnaires

10 https://openalex.org/W4293003987 assessing

# ℹ 4,880,955 more rows

Comparison of an abstract in raw form and after tokenization

review_subsample$ab[[1]]

[1] "The 5-item World Health Organization Well-Being Index (WHO-5) is among the most widely used questionnaires assessing subjective psychological well-being. Since its first publication in 1998, the WHO-5 has been translated into more than 30 languages and has been used in research studies all over the world. We now provide a systematic review of the literature on the WHO-5.We conducted a systematic search for literature on the WHO-5 in PubMed and PsycINFO in accordance with the PRISMA guidelines. In our review of the identified articles, we focused particularly on the following aspects: (1) the clinimetric validity of the WHO-5; (2) the responsiveness/sensitivity of the WHO-5 in controlled clinical trials; (3) the potential of the WHO-5 as a screening tool for depression, and (4) the applicability of the WHO-5 across study fields.A total of 213 articles met the predefined criteria for inclusion in the review. The review demonstrated that the WHO-5 has high clinimetric validity, can be used as an outcome measure balancing the wanted and unwanted effects of treatments, is a sensitive and specific screening tool for depression and its applicability across study fields is very high.The WHO-5 is a short questionnaire consisting of 5 simple and non-invasive questions, which tap into the subjective well-being of the respondents. The scale has adequate validity both as a screening tool for depression and as an outcome measure in clinical trials and has been applied successfully across a wide range of study fields."

review_tidy %>%

filter(id == "https://openalex.org/W4293003987") %>%

pull(text) %>%

paste(collapse = " ")

[1] "5 item world health organization index 5 widely questionnaires assessing subjective psychological publication 1998 5 translated 30 languages research studies world provide systematic review literature 5 conducted systematic search literature 5 pubmed psycinfo accordance prisma guidelines review identified articles focused aspects 1 clinimetric validity 5 2 responsiveness sensitivity 5 controlled clinical trials 3 potential 5 screening tool depression 4 applicability 5 study fields.a total 213 articles met predefined criteria inclusion review review demonstrated 5 clinimetric validity outcome measure balancing unwanted effects treatments sensitive specific screening tool depression applicability study fields high.the 5 short questionnaire consisting 5 simple invasive questions tap subjective respondents scale adequate validity screening tool depression outcome measure clinical trials applied successfully wide range study fields"

Count token frequency

# Create summarized data

review_summarized <- review_tidy %>%

count(text, sort = TRUE)

# Preview Top 15 token

review_summarized %>%

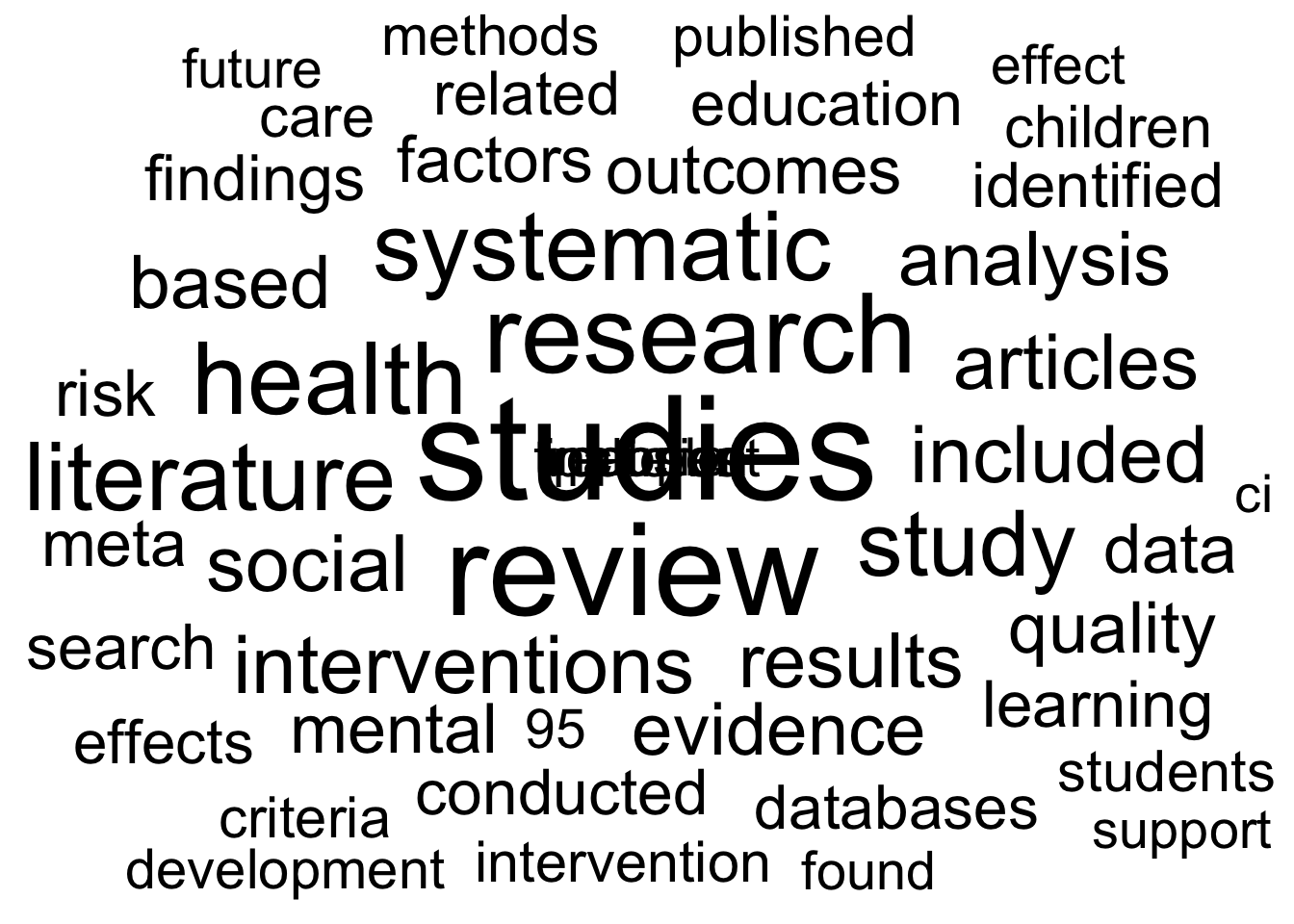

print(n = 15)# A tibble: 122,148 × 2

text n

<chr> <int>

1 studies 73398

2 review 57878

3 research 42689

4 health 35108

5 systematic 32431

6 literature 31374

7 study 29012

8 interventions 22731

9 included 21987

10 social 21528

11 articles 20631

12 results 20166

13 analysis 19624

14 based 18929

15 evidence 18545

# ℹ 122,133 more rows
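The tokenize, filter, and count steps above can be traced by hand on a toy example. The following is a minimal base R sketch of the same idea. It is an approximation for illustration only: the two abstracts and the three-word stop list are invented, and the regular-expression split is a simplification that does not reproduce the word-boundary rules of `tidytext::unnest_tokens()`.

```r
# Two invented mini-abstracts (hypothetical data, not from the subsample)
abstracts <- c(
  "Systematic reviews of health studies.",
  "A review of social studies and health."
)

# Tiny hypothetical stop list; the session uses tidytext::stop_words instead
stop_list <- c("a", "of", "and")

# Lowercase, then split on anything that is not a letter or digit
tokens <- unlist(strsplit(tolower(abstracts), "[^a-z0-9]+"))

# Drop empty strings and stop words
tokens <- tokens[tokens != "" & !tokens %in% stop_list]

# Count each token and sort by frequency, most frequent first
counts <- sort(table(tokens), decreasing = TRUE)
counts
```

In this toy example "review" and "reviews" are counted as separate tokens because nothing is stemmed or lemmatized. The same effect is visible in the real frequency table, where "studies" and "study" appear as separate entries.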